Day 1 - The history of neural networks

The Artificial Neuron

- One or more binary inputs.

- Binary output that activates when more than a certain number of inputs are active.

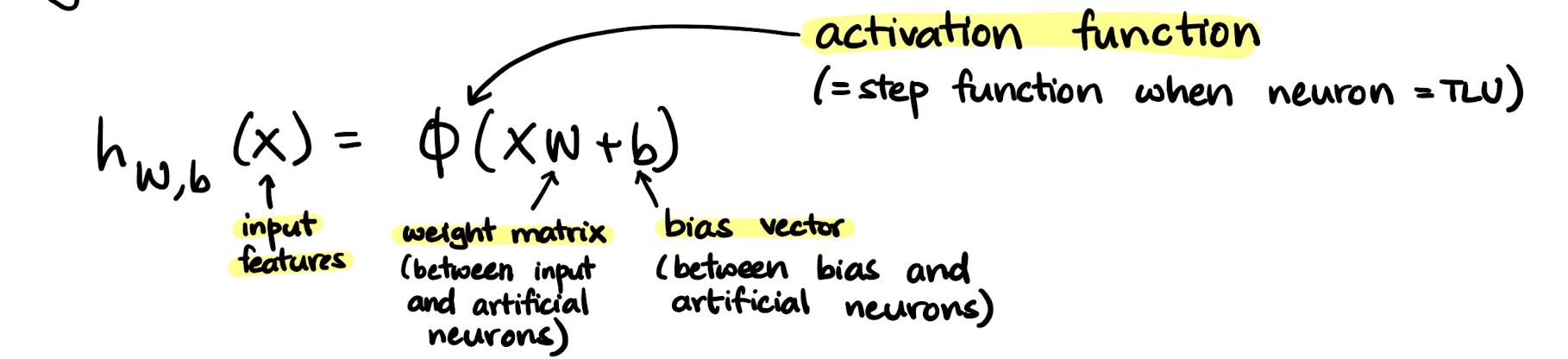
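
The two properties above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation; the function name `artificial_neuron` and the `threshold` parameter are hypothetical names chosen for this example.

```python
def artificial_neuron(inputs, threshold):
    """Fire (return 1) when more than `threshold` binary inputs are active."""
    return 1 if sum(inputs) > threshold else 0


# With two inputs, threshold=1 behaves like logical AND
# and threshold=0 behaves like logical OR.
for a in (0, 1):
    for b in (0, 1):
        print(a, b, artificial_neuron([a, b], 1), artificial_neuron([a, b], 0))
```

Changing only the threshold changes which logical function the same neuron computes.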

Threshold Logic Unit (TLU)

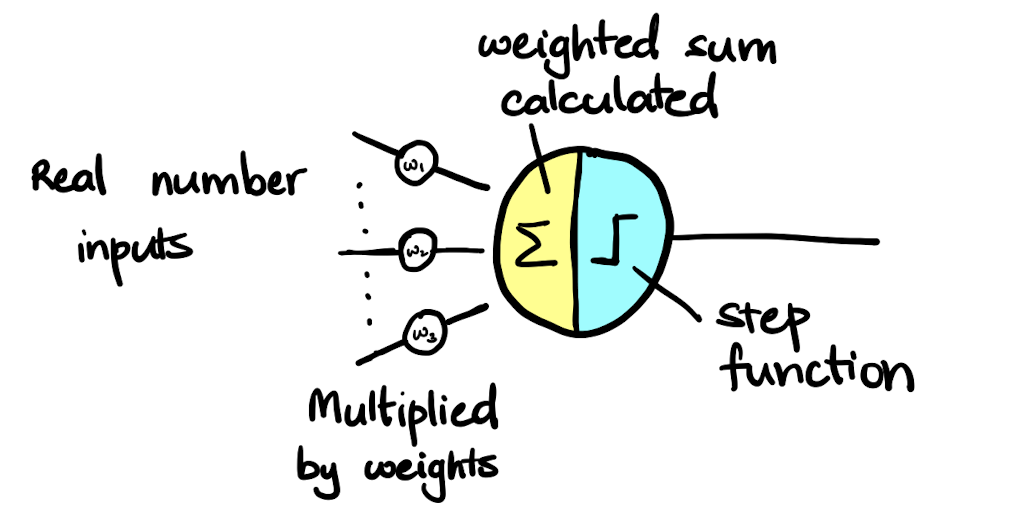

- Real number inputs.

- Each input is multiplied by a weight, and the weighted sum is calculated.

- The weighted sum is passed through a step function (e.g. the Heaviside function or the sign function).

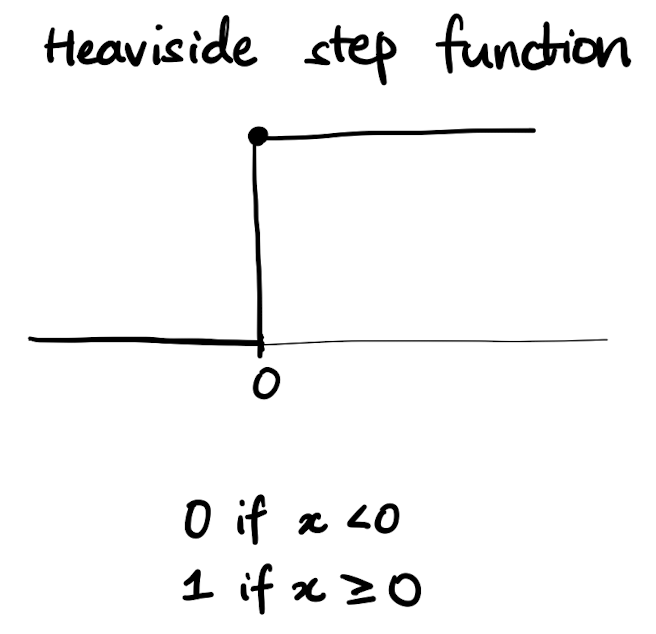

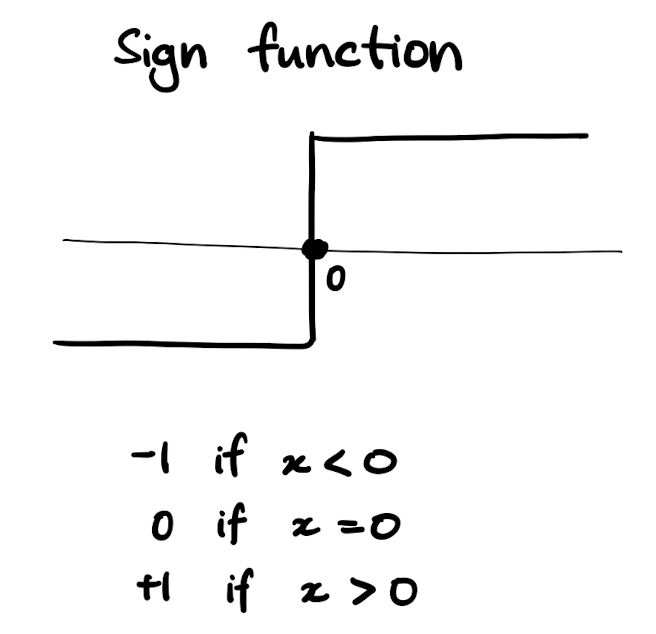

Examples of step functions:
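
The Heaviside and sign functions named above, and the weighted sum they are applied to, might look like the following sketch. The value returned at exactly zero is an assumed convention (here `heaviside(0)` is 1), and the names `heaviside`, `sign`, and `tlu` are illustrative.

```python
def heaviside(z):
    """Heaviside step: 0 for negative z, 1 otherwise (assumed convention at z = 0)."""
    return 1 if z >= 0 else 0


def sign(z):
    """Sign function: -1 for negative z, 0 at zero, +1 for positive z."""
    return (z > 0) - (z < 0)


def tlu(inputs, weights, step=heaviside):
    """Compute the weighted sum of the inputs and pass it through a step function."""
    z = sum(w * x for w, x in zip(weights, inputs))
    return step(z)


# Weighted sum: 0.5 * 1.0 + (-1.0) * 2.0 = -1.5
print(tlu([1.0, 2.0], [0.5, -1.0]))        # 0
print(tlu([1.0, 2.0], [0.5, -1.0], sign))  # -1
```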

TLUs can be used for simple linear binary classification.

- A TLU computes a linear combination of its inputs.

- If the result exceeds a certain threshold, the positive class is predicted; otherwise, the negative class is predicted.

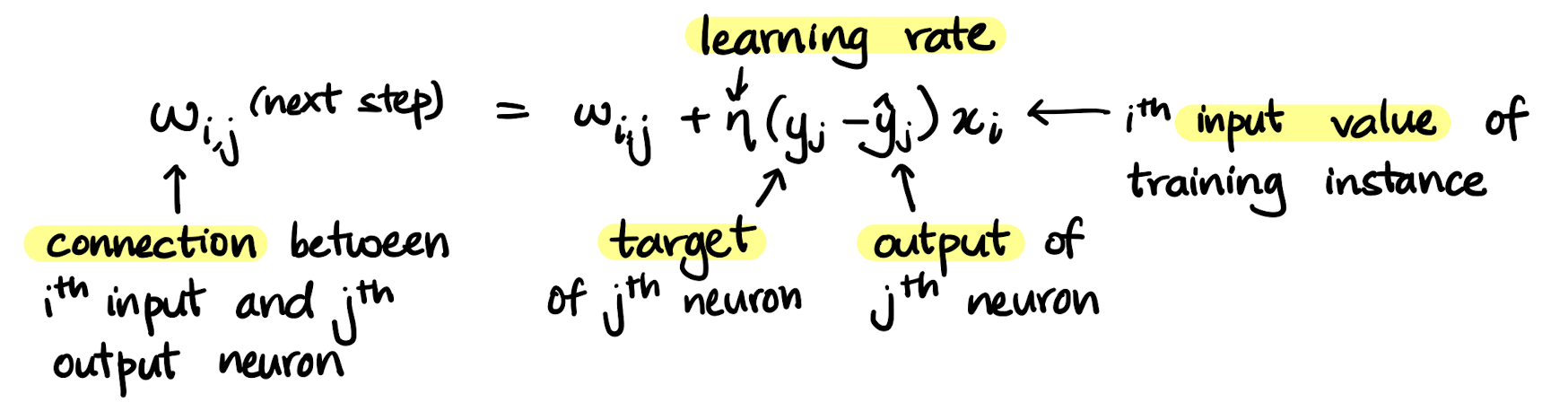

- Training the TLU means finding the right weights.

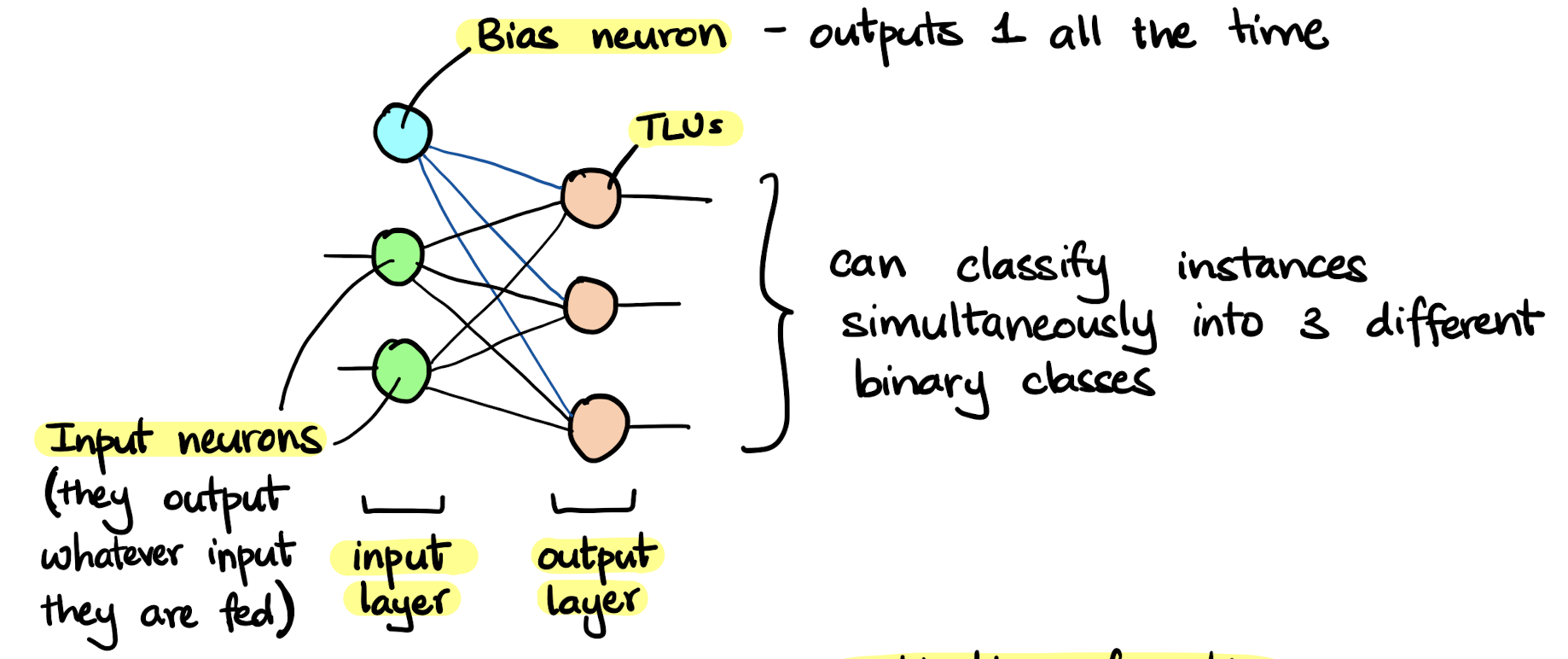
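
As a rough illustration of this kind of classification, the sketch below applies a TLU to a few two-dimensional points. The weights, threshold, and points are hypothetical values picked by hand for the example; training would normally be what finds the weights.

```python
def tlu_predict(x, weights, threshold):
    """Predict the positive class (1) when the weighted sum exceeds the threshold."""
    score = sum(w * xi for w, xi in zip(weights, x))
    return 1 if score > threshold else 0


# Hypothetical, hand-picked parameters (not learned from data).
weights = [2.0, -1.0]
threshold = 1.0

points = [(1.0, 0.5), (0.2, 0.3), (1.5, 2.5)]
predictions = [tlu_predict(p, weights, threshold) for p in points]
print(predictions)  # [1, 0, 0]
```

The decision boundary here is the straight line `2 * x1 - x2 = 1`: points on one side are labeled positive, points on the other side negative.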

The Perceptron

- Made up of a single dense layer of TLUs (each TLU connected to all inputs).

- The decision boundary of each output neuron is linear, so a perceptron cannot learn patterns that are not linearly separable (e.g. the XOR function).

- Predictions are based on hard thresholds (no class probabilities).

- Trained using the Perceptron Learning Algorithm (PLA).

- Perceptron convergence theorem: if training instances are linearly separable, PLA will converge to a solution.

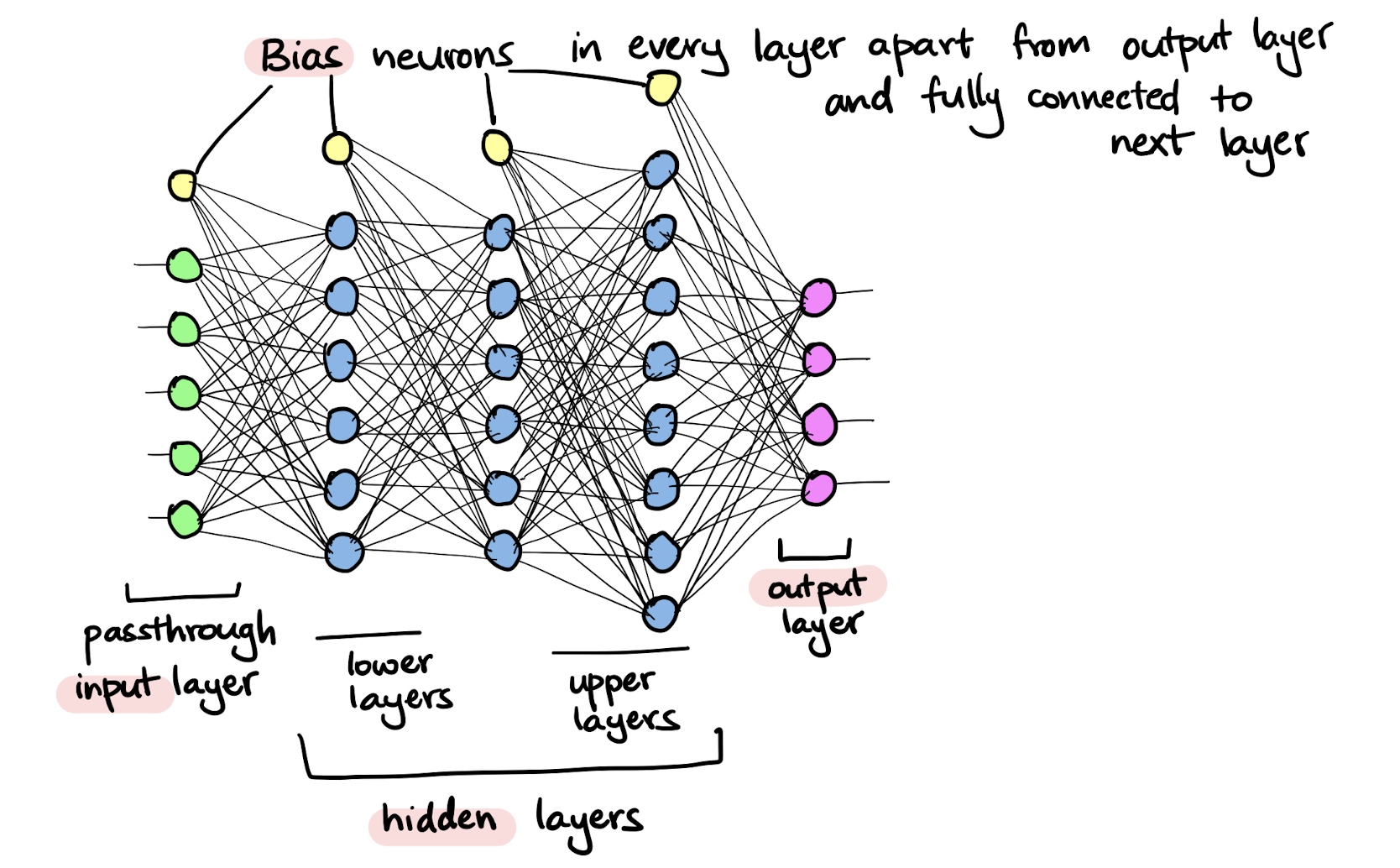
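
The points above can be tried out with a small sketch of perceptron learning for a single output neuron. It assumes the common update rule (add the learning rate times the prediction error times the input to each weight), a bias term, a learning rate of 1.0, and a cap on the number of epochs; the function name and these settings are choices made for this example.

```python
def step(z):
    """Hard threshold: 1 when z is non-negative, 0 otherwise."""
    return 1 if z >= 0 else 0


def train_perceptron(samples, labels, lr=1.0, max_epochs=100):
    """Train one TLU; return (weights, bias, converged)."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(max_epochs):
        errors = 0
        for x, y in zip(samples, labels):
            y_hat = step(sum(w * xi for w, xi in zip(weights, x)) + bias)
            delta = y - y_hat
            if delta != 0:
                # Nudge the weights toward the correct answer for this instance.
                errors += 1
                weights = [w + lr * delta * xi for w, xi in zip(weights, x)]
                bias += lr * delta
        if errors == 0:  # a full pass with no mistakes: a solution was found
            return weights, bias, True
    return weights, bias, False


inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
print(train_perceptron(inputs, [0, 0, 0, 1])[2])  # AND is linearly separable: True
print(train_perceptron(inputs, [0, 1, 1, 0])[2])  # XOR is not: False
```

On the AND labels the loop stops after a mistake-free pass, as the convergence theorem predicts. On the XOR labels no mistake-free pass is possible, so the epoch cap is reached.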

Multilayer Perceptrons (MLP)

- Obtained by stacking multiple perceptrons.

- A feedforward neural network: the signal flows in only one direction (from input to output).

- Trained using the backpropagation algorithm.
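
To show what stacking buys, here is a minimal forward-pass sketch of a two-layer network that computes XOR, which a single perceptron cannot. The weights and biases are hand-picked for illustration rather than learned with backpropagation, and the names `dense_layer`, `mlp_forward`, and `xor_layers` are hypothetical.

```python
def step(z):
    """Hard threshold: 1 when z is non-negative, 0 otherwise."""
    return 1 if z >= 0 else 0


def dense_layer(inputs, weights, biases):
    """One perceptron layer: every TLU is connected to every input."""
    return [
        step(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def mlp_forward(inputs, layers):
    """Pass the signal through each layer in turn, from input to output."""
    signal = inputs
    for weights, biases in layers:
        signal = dense_layer(signal, weights, biases)
    return signal


# Hand-picked parameters (an assumption for this example, not trained values).
xor_layers = [
    ([[1.0, 1.0], [1.0, 1.0]], [-0.5, -1.5]),  # hidden layer: OR-like and AND-like units
    ([[1.0, -1.0]], [-0.5]),                   # output: OR and not AND
]

for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x, mlp_forward(list(x), xor_layers))
```

Each layer only consumes the previous layer's output, which is the one-directional flow described above.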